The software tool that KCL had built and the expertise they provided really helped us in getting our entity matching service up and running – we couldn’t have delivered the project in this timescale without that.

Alan Buxton, CTO OpenCorporates

19 April 2022

Impact story: crowdsourcing tool for matching company data

Written by Elena Simperl, Gefion Thuermer


Introduction

Most governments across the world run programmes that aim to provide microenterprises and SMEs with better opportunities to compete with big business. For the European Commission, for instance, SMEs are the ‘backbone’ of the European economy as they employ more than 100 million people. In the UK too, SMEs make up 98% of the business population. Giving SMEs better access to markets and more opportunity to compete with large firms quickly translates into higher economic growth, more employment and more prosperity.

One way of getting SMEs to participate better is to enable them to bid for public contracts. Estimates suggest that the value of global public procurement, i.e. the total of government spending on goods, services and works, exceeds $13 trillion annually, a vast sum of money. If SMEs find better ways to tap into the public procurement market the economic potential is huge. So one obvious place to start is to make public procurement more accessible.

However, there is one big problem: fewer than 3% of public tenders and contracts are published openly. Poor-quality data about tendering and contracting opportunities and a lack of visibility are the chief reasons why SMEs are unable to access the public procurement market as much as they would like. On top of that, it’s not always easy to find accurate and comprehensive information about competitors, large or small. For many SMEs, the picture of who they’re competing with is at best foggy. Thus, getting more SMEs to bid for public contracts means, in the first instance, making data about contract opportunities and about companies more available, transparent and accessible. And this is what a team of researchers from the Department of Informatics at King’s College London have set out to do.

What was the challenge?

OpenCorporates, the world’s largest open database of companies, allows anyone to search for company data on their website. It’s their chief aim to provide better company data to make it easier for SMEs to discover opportunities and find out about the competition. Better company data in the public domain means more transparency and a level playing field.

In order to do this, organisations like OpenCorporates need to deal on a day-to-day basis with vast amounts of unstructured, heterogeneous data about a myriad of different companies. The data that comes in needs to be matched against the catalogue in their database. Given the sheer volume of data, this process is automated, with the relevant information extracted from text documents. However, these documents are often incomplete or outright incorrect.

To get this matching against catalogue records right, OpenCorporates employ a supervised machine learning model, which produces results with a certain level of confidence. The problem is, what do you do when confidence is low, i.e. when your algorithm finds it difficult to match data against the catalogue in a robust way? This was a considerable challenge for OpenCorporates. Initially, they annotated a large volume of data in-house to get their algorithm off the ground, but that was just enough to get started. Soon enough they required large amounts of good data to train their algorithm properly.
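
The low-confidence problem can be pictured as a simple routing rule: matches the model is sure about are accepted automatically, and the rest are set aside for human review. The sketch below is purely illustrative. The threshold value, the record fields and the function name are all assumptions, not details of OpenCorporates’ actual system.

```python
from typing import Dict, List, Tuple

# Hypothetical cut-off; a real value would be tuned on labelled data.
CONFIDENCE_THRESHOLD = 0.8


def route_matches(
    matches: List[Dict], threshold: float = CONFIDENCE_THRESHOLD
) -> Tuple[List[Dict], List[Dict]]:
    """Split model output into auto-accepted matches and ones needing human review."""
    accepted, needs_review = [], []
    for match in matches:
        if match["confidence"] >= threshold:
            accepted.append(match)
        else:
            needs_review.append(match)
    return accepted, needs_review


# Made-up example records, for illustration only.
example = [
    {"incoming": "ACME Ltd.", "candidate": "ACME LIMITED", "confidence": 0.97},
    {"incoming": "Acme Hldgs", "candidate": "ACME HOLDINGS PLC", "confidence": 0.55},
]
accepted, needs_review = route_matches(example)
```

In this picture, the records that land in `needs_review` are the ones worth sending to human annotators.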

What exactly did we do?

As part of the EU-funded research project TheyBuyForYou, Elena Simperl, Professor of Computer Science, and Dr Eddy Maddalena sat down together to build a tool that supports OpenCorporates in improving their model so that they can perform ‘entity resolution’ and publish better company data. An obvious way to make algorithms better is to show them how real people would match incomplete company data correctly, so the King’s team decided to get crowd workers involved. For this purpose, the team set up a large crowdsourcing task on Amazon’s Mechanical Turk platform. This was a complex project that required considerable insight into task design, strategies for dealing with data quality issues, and a sound understanding of what motivates people to complete tasks effectively and according to specifications. Above all, tasks had to be designed so that the service remained cost-effective and affordable.

For the actual task, the crowd workers were provided with metadata about target companies. With this knowledge, they then matched incoming company data with the appropriate record in the catalogue. The team asked multiple workers at once to attempt the same match so that they could conclude with confidence that the matching was correct.
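
One common way to combine several workers’ answers for the same item is majority voting with a minimum level of agreement. The sketch below shows that idea only; the article does not say which aggregation method the team used, and the function name, the agreement threshold and the record identifiers are hypothetical.

```python
from collections import Counter
from typing import List, Optional, Tuple


def aggregate_judgements(
    answers: List[str], min_agreement: float = 0.6
) -> Tuple[Optional[str], float]:
    """Return the majority answer and its share of the votes.

    The answer is None when there are no answers or when the most
    popular option falls short of min_agreement.
    """
    if not answers:
        return None, 0.0
    winner, votes = Counter(answers).most_common(1)[0]
    agreement = votes / len(answers)
    if agreement < min_agreement:
        return None, agreement
    return winner, agreement


# Three workers judge the same incoming record (made-up catalogue IDs).
label, agreement = aggregate_judgements(["gb/0123", "gb/0123", "gb/0456"])
```

Items where no answer reaches the agreement threshold could be shown to additional workers rather than being used as training data.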

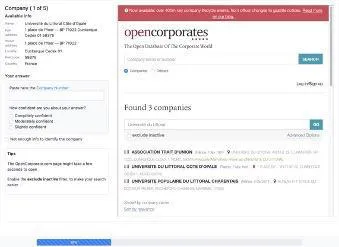

In order to communicate with Amazon’s API, the King's team built a tool that handles interactions via IPython notebooks. The tool leveraged insights from crowdsourcing research around motivations and incentives of crowd workers published by Professor Simperl and her team. Here is a link to the GitHub page of the tool and below a screenshot of what it looks like:

What was the impact?

The tool proved integral to the TheyBuyForYou project and was greeted with great enthusiasm. Following a series of pilot tests that produced great results, the tool was actively employed in OpenCorporates’ daily business routine over several months. Overall, OpenCorporates used more than 16,000 records that the King’s team had generated using the crowdsourcing tool to train their algorithm. In some instances, OpenCorporates would know for only a handful of cases that their records were absolutely correct, which made the crowdsourcing tool a valuable resource for producing good algorithmic training data. Ultimately, the tool has given OpenCorporates’ entity matching service a big push and helped them publish better company data, which feeds right into making public procurement more transparent and accessible for all.