Established in 2007 within the Department of Engineering, the Centre for Robotics Research (CORE) is a leading hub for multidisciplinary robotics innovation.

Integrating expertise from mechanical engineering, electronics, computer science, AI, and systems design, CORE addresses real-world challenges through cross-sector collaboration.

With partnerships across manufacturing, healthcare, automotive, food, and space industries, the centre fosters global impact through a network of 100+ academic and industrial collaborators. It features over 350m² of dedicated robotics lab space as part of a £40 million engineering investment.

CORE has contributed to over £30 million in R&D projects funded by major bodies including UKRI, EU, RAEng, RS, UKSA, and ESA.

Priorities

Enabling sustainable solutions

Our research advances the robotics and autonomous systems that contribute to sustainable practices across industries, from manufacturing and space to healthcare.

Innovating ethical robotics

We commit to ethical considerations, researching robotics with a focus on responsibility, transparency and inclusivity.

Interdisciplinary excellence

We aim to lead in interdisciplinary research, fostering collaborations that transcend traditional boundaries and amplify the impact of robotic advancements.

Empowering future innovators

Through cutting-edge educational programmes (such as the CORE-led MSc and PhD in Robotics), we aspire to empower the next generation of robotics leaders, equipping them with the skills and mindset needed to tackle future challenges.

Global thought leadership

We envision our research and educational initiatives contributing to global thought leadership in robotics, influencing the discourse and shaping the trajectory of this dynamic field.

Themes

Structure

The design of the physical structure comprising a robot’s body is key to enabling motion, balance and manipulation. Robots that can change body shape, such as ‘metamorphic’ and ‘soft body’ robots, provide unique flexibility to navigate uneven surfaces and constrained spaces, while manipulators that can change shape can grasp irregular objects and perform dexterous tasks.

Why is it hard for a robot to walk? Robots need to carry a processor and power supply, as well as sensors, and these components can be heavy and awkward. Metamorphic or ‘origami’ designs allow a robot’s structure to rebalance as it moves, making walking more tractable.

Why is it difficult for a robot to grasp? Picking up objects requires a complex combination of vision and touch. Creating robots that can grasp, hold, tilt, and push objects with just the right amount of strength brings robots closer to human-like dexterity, and greatly increases their usefulness.

Sensing

Robotic sensing gives robots the ability to see, touch, hear and move around with the help of environmental feedback. Robotic sensors may be analogous to human senses, or may allow robots to sense things that humans cannot.

Sensing can be ‘local’, where robots are in the same physical space as human controllers or collaborators. ‘Haptic’ sensing is one type of local sensing. Haptic data can enable a robot to interact successfully with the physical world. Researchers in this area try to understand how people make use of touch and apply this understanding to develop robots that perform more accurately – possibly saving lives in the process!

Sensing can also be ‘remote’, where robots are located away from humans. Remote sensing includes medical imaging sensors attached to probes and used in surgical procedures, chemical sensors attached to mobile robots and used to detect explosives, and environment sensors attached to drones (unmanned aerial vehicles, or UAVs) and used to observe and model the Earth.

Motion

Walking across a room without crashing into furniture, crossing a crowded street without bumping into others or lifting a coffee cup from a table to one’s lips are tasks performed by most people without much conscious thought. But a robot has to choreograph its every move. Motion can be engineered, using kinematic equations that design trajectories for leg, arm or wheel actions, or planned, using artificial intelligence to determine sets of actions and adjust in real time if feedback indicates that actions were not executed as planned. The path or trajectory of such motion should be designed effectively, so that a robot achieves its goal, and efficiently, so that it does not waste energy, a precious resource for any robot.
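To make the idea of ‘engineered’ motion concrete, here is a minimal Python sketch of the kinematic equations for a planar two-link arm, together with a straight-line joint-space trajectory. It is an illustration only and does not describe any specific CORE system: the function names, the unit link lengths and the linear interpolation scheme are all assumptions chosen for brevity.

```python
import math


def forward_kinematics(theta1, theta2, l1=1.0, l2=1.0):
    """Return the (x, y) tip position of a planar two-link arm.

    theta1 is the shoulder angle and theta2 the elbow angle (radians);
    l1 and l2 are hypothetical link lengths.
    """
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def joint_trajectory(start, goal, steps):
    """Linearly interpolate joint angles from start to goal (steps >= 2)."""
    return [
        tuple(s + (g - s) * i / (steps - 1) for s, g in zip(start, goal))
        for i in range(steps)
    ]


if __name__ == "__main__":
    # Sweep the arm from fully stretched along x to a bent pose.
    for angles in joint_trajectory((0.0, 0.0), (math.pi / 2, math.pi / 4), 5):
        print(angles, forward_kinematics(*angles))
```

A real planner would also respect joint limits, obstacles and energy use; the sketch shows only the geometric core on which such planning builds.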

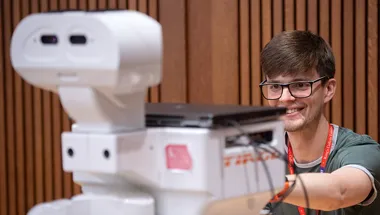

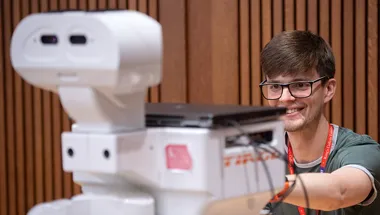

Human-Robot Interaction

Human-robot interaction (HRI) research studies the expectations and reactions of humans with respect to robots so as to ensure effective interactions. This can range from how workers behave when controlling robots remotely, to how humans trapped in a collapsed building might respond to a rescue robot, to how people can make decisions jointly with intelligent robots. HRI research is integrated within much of our robotics research: if we know how humans are likely to respond, we can build robots that fit in with that behaviour.

Learning

Learning is how robots can acquire skills or adapt to environments beyond their pre-programmed behaviours. Robots can learn in many ways – by reinforcement, by imitation, and autonomously, for example. As such methods develop, new applications for robotics are emerging that aim to bring AI (artificial intelligence) into the ‘real world’. ‘Reinforcement Learning’ techniques associate a reward with specific outcomes due to robot actions and involve an iterative process in which a robot learns to maximise its reward through sequences of trial-and-error actions. ‘Learning from Demonstration’ techniques offer human-in-the-loop training, where a robot learns to imitate the actions of a human demonstrator. ‘Statistical Machine Learning’ techniques, such as artificial neural networks, allow a robot to detect and recognise patterns in its environment and develop appropriate responses without guidance from a human teacher.
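The trial-and-error loop behind reinforcement learning can be sketched in a few lines of Python. The example below uses tabular Q-learning, one common reinforcement learning technique, on a made-up five-cell corridor in which a simulated robot is rewarded only when it reaches the right-hand end. The environment, the parameter values and all function names are assumptions for illustration, not a description of CORE's methods.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # index 0 = step left, index 1 = step right


def step(state, action_index):
    """Move one cell (clamped to the corridor); reward 1.0 only on reaching the goal."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


def choose_action(q_row, epsilon, rng):
    """Epsilon-greedy choice; ties are broken at random so early episodes explore."""
    if rng.random() < epsilon or q_row[0] == q_row[1]:
        return rng.randrange(2)
    return 0 if q_row[0] > q_row[1] else 1


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Run Q-learning and return the learned table q[state][action_index]."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            a = choose_action(q[state], epsilon, rng)
            next_state, reward = step(state, a)
            # Nudge the estimate towards reward plus discounted future value.
            target = reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q):
    """Best learned action for every non-goal cell."""
    return ["right" if row[1] > row[0] else "left" for row in q[:-1]]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

After training, the greedy policy heads right from every cell, because the reward discovered at the goal has propagated back through the table one update at a time.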

Multi-Robot Systems

In multi-robot systems, robots coordinate with each other to perform complex tasks that might be difficult or inefficient for a single robot. This often involves dispatching small sub-problems to individual robots and allowing them to interact with each other to find solutions. In heterogeneous robot teams, different robots have different capabilities, so the coordination becomes more constrained and hence more complex. Multi-robot systems have a wide set of applications, from rescue missions to delivery of payloads in a warehouse.
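As a rough illustration of dispatching sub-problems within a heterogeneous team, the Python sketch below assigns each task to the least-loaded robot that has the required capability. It is a deliberately simple greedy heuristic under assumed data: the robot names, capabilities, task costs and the `allocate_tasks` function are all hypothetical, and practical systems typically use richer schemes such as auctions or optimisation.

```python
def allocate_tasks(robots, tasks):
    """Greedily assign each task to the least-loaded capable robot.

    robots: dict mapping robot name -> set of capabilities
    tasks:  dict mapping task name -> (required capability, cost)
    Returns a dict mapping task name -> robot name, or None if no robot qualifies.
    """
    load = {name: 0 for name in robots}
    assignment = {}
    for task, (required, cost) in tasks.items():
        capable = [name for name, caps in robots.items() if required in caps]
        if not capable:
            assignment[task] = None  # no team member can do this task
            continue
        chosen = min(capable, key=lambda name: load[name])
        load[chosen] += cost
        assignment[task] = chosen
    return assignment


# Hypothetical team and task list, for illustration only.
robots = {
    "drone": {"fly", "camera"},
    "rover": {"carry", "camera"},
}
tasks = {
    "survey_roof": ("fly", 3),
    "deliver_box": ("carry", 2),
    "inspect_door": ("camera", 1),
    "lift_beam": ("crane", 5),
}

if __name__ == "__main__":
    print(allocate_tasks(robots, tasks))
```

The capability check is what makes the heterogeneous case more constrained: only the drone can survey the roof, while the inspection task can go to whichever camera-equipped robot is currently less busy.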

Publications

Training People to Reward Robots

Sun, E., Howard, M. & Zhu, Y., 2025, (Accepted/In press) IEEE/RSJ International Conference on Intelligent Robots and Systems. Research output: Chapter in Book/Report/Conference proceeding › Conference paper › peer-review

Redundant structure based multi-motor servo system fuzzy adaptive fault-tolerant control via unbalanced torque compensation

Wang, B., Yu, J., Xin, H., Cai, M., Lam, H.-K. & Yu, J., 12 Jun 2025, In: IEEE Transactions on Fuzzy Systems. Research output: Contribution to journal › Article › peer-review

Customized Non-Monotonic Prescribed Performance Control for Stochastic MEMS Gyroscopes with Insufficient Input Capability

Xia, Y., Xiao, K., Cao, J., Lam, H.-K., Precup, R.-E., Rutkowski, L. & Agarwal, R. K., 14 May 2025, In: IEEE Transactions on Circuits and Systems I: Regular Papers. Research output: Contribution to journal › Article › peer-review

Further study on stability analysis for Markov linear parameter-varying systems

Wang, L. & Lam, H.-K., 31 May 2025, In: IEEE Transactions on Automatic Control. Research output: Contribution to journal › Article › peer-review

Adaptive Order-Reduction Output-Feedback System for DC-DC Power Converters Using Feedback-Loop Intelligentification

Kim, S.-K., Lim, S., Lam, H.-K. & Ahn, C. K., 6 Jun 2025, In: IEEE Transactions on Industrial Electronics. Research output: Contribution to journal › Article › peer-review

Finite-time fault detection for stochastic nonlinear networked control systems via interval type-2 T-S fuzzy framework

Zeng, Y., Wang, Z., Wu, L. & Lam, H.-K., 24 Feb 2025, In: Nonlinear Dynamics. 113, 13, p. 16493-16510 18 p. Research output: Contribution to journal › Article › peer-review

Adaptive Fuzzy Predefined-Time Cooperative Formation Control for Multiple USVs With Universal Global Performance Constraints

Song, X., Wu, C., Lam, H.-K., Wang, X. & Song, S., 14 Mar 2025, (E-pub ahead of print) In: IEEE Transactions on Intelligent Transportation Systems. Research output: Contribution to journal › Article › peer-review

Average filtering error-based event-triggered fuzzy filter design with adjustable gains for networked control systems

Pan, Y., Huang, F., Li, T. & Lam, H.-K., 28 Feb 2025, (E-pub ahead of print) In: IEEE Transactions on Fuzzy Systems. 33, 6, p. 1963-1976 14 p. Research output: Contribution to journal › Article › peer-review

Learning Probabilistic Logical Control Networks: From Data to Controllability and Observability

Lin, L., Lam, J., Shi, P., Ng, M. K. & Lam, H.-K., 13 Dec 2024, In: IEEE Transactions on Automatic Control. Research output: Contribution to journal › Article › peer-review

Closure to "Discussion: Selective-Compliance-Based Lagrange Model and Multilevel Noncollocated Feedback Control of a Humanoid Robot"

Spyrakos-Papastavridis, E., Dai, J. S., Childs, P. R. N. & Tsagarakis, N. G., Oct 2022, In: Journal of Mechanisms and Robotics. 14, 5, 056001. Research output: Contribution to journal › Article › peer-review

Minimum Friction Coefficient-Based Precision Manipulation Workspace Analysis of the Three-Fingered Metamorphic Hand

Lin, Y. H., Wang, T., Spyrakos-Papastavridis, E., Fu, Z., Xu, S. & Dai, J. S., 1 Oct 2023, In: Journal of Mechanisms and Robotics. 15, 5, 051018. Research output: Contribution to journal › Article › peer-review

Dynamic modeling of wheeled biped robot and controller design for reducing chassis tilt angle

Mao, N., Chen, J., Spyrakos-Papastavridis, E. & Dai, J. S., 1 Aug 2024, In: Robotica. 42, 8, p. 2713-2741 29 p. Research output: Contribution to journal › Article › peer-review

Recursive Estimator-Based Fuzzy Adaptive Control for Discrete-Time Uncertain Systems with State Saturations and Missing Measurements

Shi, W., Liu, J., Lam, H.-K. & Yu, J., Nov 2024, In: IEEE Transactions on Fuzzy Systems. Research output: Contribution to journal › Article › peer-review

Stabilization of Interval Type-2 Polynomial Fuzzy Networked Control Systems under Cyber-Attacks

Xiao, B., Lam, H.-K., Sun, L., Chen, S., Yu, Z., Li, T., Zhu, Z. & Yeatman, E., 16 Sept 2024, (Accepted/In press) In: IEEE Transactions on Industrial Cyber-Physical Systems. Research output: Contribution to journal › Article › peer-review

Parameter-Optimization-Based Adaptive Fault-Tolerant Control for a Quadrotor UAV Using Fuzzy Disturbance Observers

Ren, Y., Sun, Y., Liu, Z. & Lam, H.-K., Oct 2024, (Accepted/In press) In: IEEE Transactions on Fuzzy Systems. Research output: Contribution to journal › Article › peer-review

Hierarchical fuzzy model-agnostic explanation: framework, algorithms and interface for XAI

Yin, F., Lam, H.-K. & Watson, D., 18 Oct 2024, (Accepted/In press) In: IEEE Transactions on Fuzzy Systems. Research output: Contribution to journal › Article › peer-review

Estimation of the Domain of Attraction for Continuous-Time Saturated Positive Polynomial Fuzzy Systems Based on Novel Analysis and Convexification Strategies

Han, M., Huang, Y., Lam, H.-K., Guo, G. & Wang, Z., 16 Oct 2024, In: Fuzzy Sets and Systems. 498, 109155. Research output: Contribution to journal › Article › peer-review

Ventricular Arrhythmia Classification using Similarity Maps and Hierarchical Multi-Stream Deep Learning

Lin, Q., Oglic, D., Curtis, M., Lam, H.-K. & Cvetkovic, Z., 16 Oct 2024, (Accepted/In press) In: IEEE Transactions on Biomedical Engineering. 13 p. Research output: Contribution to journal › Article › peer-review

Relaxed stability and non-weighted L2-gain analysis for asynchronously switched polynomial fuzzy systems

Bao, Z., Lam, H.-K., Li, X. & Liu, F., 4 Aug 2024, (Accepted/In press) In: International Journal of Fuzzy Systems. Research output: Contribution to journal › Article › peer-review

Human Movement Prediction with Wearable Sensors on Loose Clothing

Shen, T., Di Giulio, I. & Howard, M., 9 Sept 2024, IEEE-RAS Int. Conf. on Humanoid Robots (HUMANOIDS) 2024. Research output: Chapter in Book/Report/Conference proceeding › Conference paper › peer-review

Adaptive Fuzzy Finite-Time Singular Perturbation Control for Flexible Joint Manipulators With State Constraints

Rui, Q., Lam, H.-K., Liu, J. & Yu, J., 16 Sept 2024, In: IEEE Transactions on Systems, Man, and Cybernetics: Systems. 54, 12, p. 7521-7527 7 p. Research output: Contribution to journal › Article › peer-review

Finite-Time Asynchronous Switching Control for Fuzzy Markov Jump Systems by Applying Polynomial Membership Functions

Zhao, Y., Wang, L., Xie, X. & Lam, H.-K., 30 Aug 2024, In: IEEE Transactions on Circuits and Systems I: Regular Papers. 71, 12, p. 5607-5617 11 p. Research output: Contribution to journal › Article › peer-review

Short-term load forecasting: cascade intuitionistic fuzzy time series-univariate and bivariate models

Yolcu, O. C., Lam, H.-K. & Yolcu, U., 29 Jul 2024, In: Neural Computing and Applications. 36, 32, p. 20167-20192 26 p. Research output: Contribution to journal › Article › peer-review

Switched command-filtered-based adaptive fuzzy output-feedback funnel control for switched nonlinear MIMO delayed systems

Li, Z., Chen, H., Lam, H.-K., Wu, W. & Zhang, W., 2024, In: IEEE Transactions on Fuzzy Systems. 32, 11, p. 6560-6572 13 p. Research output: Contribution to journal › Article › peer-review

Reinforcement Learning for Fuzzy Structured Adaptive Optimal Control of Discrete-Time Nonlinear Complex Networks

Wu, T., Cao, J., Xiong, L., Park, J.-H. & Lam, H.-K., 2024, In: IEEE Transactions on Fuzzy Systems. 32, 11, p. 6035-6043 9 p. Research output: Contribution to journal › Article › peer-review

Boundary output tracking of nonlinear parabolic differential systems via fuzzy PID control

Zhang, J.-F., Wang, J. W., Lam, H.-K. & Li, H. X., 2024, In: IEEE Transactions on Fuzzy Systems. 32, 12, p. 6863-6877 15 p. Research output: Contribution to journal › Article › peer-review

Command Filter-Based Finite-Time Constraint Control for Flexible Joint Robots Stochastic System with Unknown Dead Zones

Dong, Y., Lam, H.-K., Liu, J. & Yu, J., 17 Jul 2024, In: IEEE Transactions on Fuzzy Systems. 32, 10, p. 5836-5844 9 p. Research output: Contribution to journal › Article › peer-review

A Probabilistic Model of Activity Recognition with Loose Clothing

Shen, T., Di Giulio, I. & Howard, M., 2023, In: Proceedings - IEEE International Conference on Robotics and Automation. p. 12659-12664 6 p. Research output: Contribution to journal › Article › peer-review

Fast Finite-Time Formation Control of UAVs With Multiple Loops and External Disturbances

Huang, W., Guo, Y., Ran, G., Lam, H.-K., Liu, J. & Wang, B., 1 Jul 2024, In: IEEE Transactions on Intelligent Vehicles. Research output: Contribution to journal › Article › peer-review

Q-Learning & Economic NL-MPC for Continuous Biomass Fermentation

Vinestock, T., Lam, H.-K., Taylor, M. & Guo, M., 26 Jun 2024, Computer Aided Chemical Engineering. Vol. 53. p. 1807-1812 6 p. (Computer Aided Chemical Engineering; vol. 53). Research output: Chapter in Book/Report/Conference proceeding › Chapter › peer-review

Event-Triggered Disturbance Rejection Control for Brain-Actuated Mobile Robot: An SSA-Optimized Sliding Mode Approach

Zhu, Z., Song, J., He, S., Lam, H.-K. & Liu, J. J. R., 10 May 2024, (Accepted/In press) In: IEEE/ASME Transactions on Mechatronics. p. 1-12 12 p. Research output: Contribution to journal › Article › peer-review

Stability analysis of interval type-2 sampled-data polynomial fuzzy-model-based control system with a switching control scheme

Chen, M., Lam, H.-K., Xiao, B., Zhou, H. & Xuan, C., 10 May 2024, In: Nonlinear Dynamics. 112, 13, p. 11111-11126 16 p. Research output: Contribution to journal › Article › peer-review

A Hybrid GCN-LSTM Model for Ventricular Arrhythmia Classification Based on ECG Pattern Similarity

Lin, Q., Oglic, D., Lam, H.-K., Curtis, M. & Cvetkovic, Z., 15 Apr 2024, (Accepted/In press) 46th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBC 2024: IEEE. 4 p. Research output: Chapter in Book/Report/Conference proceeding › Conference paper › peer-review

Stiffness evaluation of a novel ankle rehabilitation exoskeleton with a type-variable constraint

Wang, T., Lin, Y. H., Spyrakos-Papastavridis, E., Xie, S. Q. & Dai, J. S., Jan 2023, In: Mechanism and Machine Theory. 179, 105071. Research output: Contribution to journal › Article › peer-review

Fuzzy Observer-based Command Filtered Adaptive Control of Flexible Joint Robots with Time-varying Output Constraints

Su, J., Lam, H.-K., Liu, J. & Yu, J., 11 Mar 2024, In: IEEE Transactions on Circuits and Systems II: Express Briefs. 71, 9, p. 4251-4255 5 p. Research output: Contribution to journal › Article › peer-review

Anti-Attack Protocol-Based Synchronization Control for Fuzzy Complex Dynamic Networks

Wu, T., Ahn, C. K., Cao, J., Xiong, L., Liu, Y. & Lam, H.-K., 25 Feb 2024, In: IEEE Transactions on Fuzzy Systems. 32, 6, p. 3433-3443 11 p. Research output: Contribution to journal › Article › peer-review

Fuzzy Neural Network-Based Adaptive Sliding-Mode Descriptor Observer

Zhong, Z., Lam, H.-K., Basin, M. V. & Zeng, X., 1 Jun 2024, In: IEEE Transactions on Fuzzy Systems. 32, 6, p. 3342-3354 13 p. Research output: Contribution to journal › Article › peer-review

Observer-based fuzzy tracking control for an unmanned aerial vehicle with communication constraints

Kong, L., Liu, Z., Zhao, Z. & Lam, H.-K., 1 Jun 2024, In: IEEE Transactions on Fuzzy Systems. 32, 6, p. 3368-3380 13 p. Research output: Contribution to journal › Article › peer-review

Synchronous MDADT-Based Fuzzy Adaptive Tracking Control for Switched Multiagent Systems via Modified Self-Triggered Mechanism

Liang, H., Wang, W., Pan, Y., Lam, H.-K. & Sun, J., 9 Feb 2024, In: IEEE Transactions on Fuzzy Systems. 32, 5, p. 2876-2889 14 p. Research output: Contribution to journal › Article › peer-review

New stability criterion for positive impulsive fuzzy systems by applying polynomial impulse-time-dependent method

Wang, L., Zheng, B. & Lam, H.-K., 10 Feb 2024, In: IEEE Transactions on Cybernetics. 54, 9, p. 5473-5482 10 p. Research output: Contribution to journal › Article › peer-review

Integrated Fault-Tolerant Control Design With Sampled-Output Measurements for Interval Type-2 Takagi-Sugeno Fuzzy Systems

Zhou, H., Lam, H.-K., Xiao, B. & Xuan, C., 2024, In: IEEE Transactions on Cybernetics. 54, 9, p. 5068-5077 10 p. Research output: Contribution to journal › Article › peer-review

Polynomial Fuzzy Observer-Based Feedback Control for Nonlinear Hyperbolic PDEs Systems

Tsai, S.-H., Lee, W.-H., Tanaka, K., Chen, Y.-J. & Lam, H.-K., 7 Feb 2024, In: IEEE Transactions on Cybernetics. 54, 9, p. 5257-5269 13 p. Research output: Contribution to journal › Article › peer-review

Double Asynchronous Switching Control for Takagi–Sugeno Fuzzy Markov Jump Systems via Adaptive Event-Triggered Mechanism

Zhao, Y., Wang, L., Xie, X., Hou, J. & Lam, H.-K., 1 Feb 2024, In: IEEE Transactions on Systems, Man, and Cybernetics: Systems. 54, 5, p. 2978-2989 12 p. Research output: Contribution to journal › Article › peer-review

Spatiotemporal Fuzzy-Observer-based Feedback Control for Networked Parabolic PDE Systems

Wang, J. W., Feng, Y., Dubljevic, S. & Lam, H.-K., 1 May 2024, In: IEEE Transactions on Fuzzy Systems. 32, 5, p. 2625-2638 14 p. Research output: Contribution to journal › Article › peer-review

Asynchronous switching control for fuzzy Markov jump systems with periodically varying delay and its application to electronic circuits

Zhao, Y., Wang, L., Xie, X., Lam, H.-K. & Gu, J., 16 Jan 2024, In: IEEE Transactions on Automation Science and Engineering. p. 1-12 12 p. Research output: Contribution to journal › Article › peer-review

An Obstacle Avoidance-Specific Reinforcement Learning Method Based on Fuzzy Attention Mechanism and Heterogeneous Graph Neural Networks

Zhang, F., Xuan, C. & Lam, H.-K., 19 Dec 2023, (Accepted/In press) In: Engineering Applications of Artificial Intelligence. Research output: Contribution to journal › Article › peer-review

Fuzzy SMC for discrete nonlinear singularly perturbed models with semi-Markovian switching parameters

Qi, W., Cheng, J., Park, J.-H. & Lam, H.-K., 20 Nov 2023, (Accepted/In press) In: IEEE Transactions on Fuzzy Systems. Research output: Contribution to journal › Article › peer-review

LMIs-based exponential stabilization for interval delay systems via congruence transformation: Application in chaotic Lorenz system

Zheng, W., Zhang, Z., Lam, H.-K., Sun, F. & Wen, S., Nov 2023, In: Chaos, Solitons and Fractals. 176, 114060. Research output: Contribution to journal › Article › peer-review

Stability analysis and L2-gain control for positive fuzzy systems by applying a membership-function-dependent Lyapunov function

Zheng, B., Wang, L., Xie, X. & Lam, H.-K., 1 Nov 2023, In: Nonlinear Dynamics. 111, 24, p. 22255-22265 11 p. Research output: Contribution to journal › Article › peer-review

Stability and Stabilization of Fuzzy Event-Triggered Control for Positive Nonlinear Systems

Wang, Z., Meng, A., Lam, H.-K., Xiao, B. & Li, Z., 1 Nov 2023, In: International Journal of Fuzzy Systems. Research output: Contribution to journal › Article › peer-review

News

King's Culture announces Creative Practice Catalyst Seed Fund recipients

King's Culture announce the recipients of a new creative practice seed fund, enabling researchers and academics to consolidate relationships with partners in...

“Interactive, fascinating, new experiences”: King's inspires future engineers at Success for Black Students outreach days

Academics, students and technicians came together to bring engineering to life for 64 London high school students at two Success for Black Students events.

King's welcomes The Princess Royal for official opening of the Quad

HRH The Princess Royal, in her role as Chancellor of the University of London, officially opened the Quadrangle (Quad) building at King’s College London.

Former Paralympian builds brighter future for prosthetic limb users with King's

Britain’s former youngest Paralympian tested prototype prosthetic arms to create better designs.

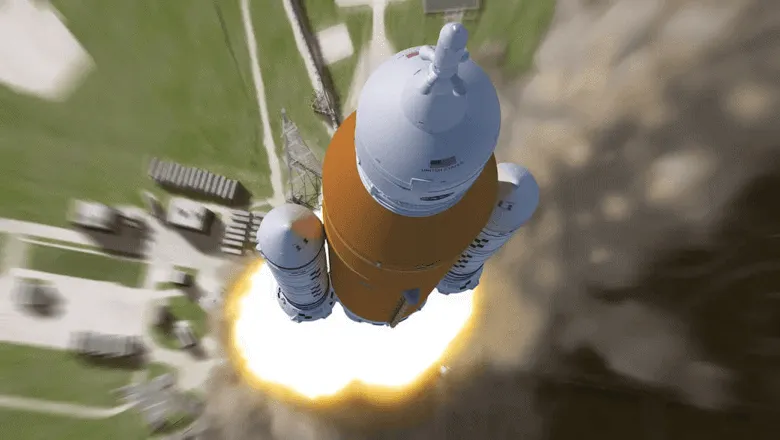

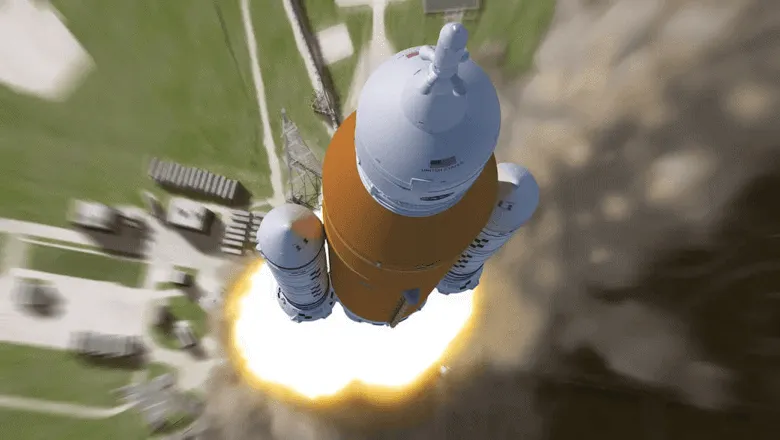

COMMENT: Trump may cancel Nasa's powerful SLS Moon rocket – here's what that would mean for Elon Musk and the future of space travel

Professor Yang Gao explores the implications of cancelling the SLS moon rocket.

Themes

Structure

The design of the physical structure comprising a robot’s body is key to enabling motion, balance and manipulation. Robots that can change body shape, such as ‘metamorphic’ and ‘soft body’ robots, provide unique flexibility to navigate uneven surfaces and constrained spaces, while manipulators that can change can grasp irregular objects and perform dexterous tasks. Why is it hard for a robot to walk? Robots need to carry a processor and power supply, as well as sensors, and these components can be heavy and awkward. Metamorphic or ‘origami’ designs allow a robot’s structure to rebalance as it moves, making walking more tractable. Why is it difficult for a robot to grasp? Picking up objects requires a complex combination of vision and touch. Creating robots that can grasp, hold, tilt, and push objects with just the right amount of strength brings robots closer to human-like dexterity, and greatly increases their usefulness.

Sensing

Robotic sensing gives robots the ability to see, touch, hear and move around with the help of environmental feedback. Robotic sensors may be analogous to human sensors, or may allow robots to sense things that humans cannot. Sensing can be ‘local’, where robots are in the same physical space as human controllers or collaborators. ‘Haptic’ sensing is one type of local sensing. Haptic data can enable a robot to interact successfully with the physical world. Researchers in this area try to understand how people make use of touch and apply this understanding to develop robots that perform more accurately – possibly saving lives in the process! Sensing can also be ‘remote’, where robots are located away from humans. Remote sensing includes medical imaging sensors that are attached to probes and employed for surgical procedures, chemical sensors that are attached to mobile robots and employed for detecting explosives, and environment sensors that are attached to drones (unmanned aerial vehicles, or UAVs) and are employed for observing and modelling the earth.

Motion

Walking across a room without crashing into furniture, crossing a crowded street without bumping into others or lifting a coffee cup from a table to one’s lips are tasks performed by most people without much conscious thought. But a robot has to choreograph its every move. Motion can be engineered, using kinematic equations that design trajectories for leg, arm or wheel actions, or planned, using artificial intelligence to determine sets of actions and adjust in real-time if feedback indicates that actions were not executed as planned. The path or trajectory of such motion should be designed effectively, so that a robot achieves its goal, and efficiently, so that it does not waste energy, a precious resource for any robot.

Human-Robot Interaction

Human-robot interaction (HRI) research attempts to study the expectations and reactions of humans with respect to robots so as to ensure effective interactions. This can range from how workers behave when controlling robots remotely, to how humans trapped in a collapsed building might respond to a rescue robot, to how people can make decisions jointly with intelligent robots. HRI research is integrated within much of our robotics research: if we know how humans are likely to respond, we can build robots that fit in with that behaviour.

Learning

Learning is how robots can acquire skills or adapt to environments beyond their pre-programmed behaviours. Robots can learn in many ways – by reinforcement, by imitation, and autonomously, for example. As such methods develop, new applications for robotics are emerging that aim to bring AI (artificial intelligence) into the ‘real world’. ‘Reinforcement Learning’ techniques associate a reward with specific outcomes due to robot actions and involve an iterative process in which a robot learns to maximise its reward through sequences of trial-and-error actions. ‘Learning from Demonstration’ techniques offer human-in-the-loop training, where a robot learns to imitate the actions of a human demonstrator. ‘Statistical Machine Learning’ techniques, such as artificial neural networks, allow a robot to detect and recognise patterns in its environment and develop appropriate responses without guidance from a human teacher.

Multi-Robot Systems

In multi-robot systems, robots coordinate with each other to perform complex tasks that might be difficult or inefficient for a single robot. This often involves dispatching small sub-problems to individual robots and allowing them to interact with each other to find solutions. In heterogeneous robot teams, different robots have different capabilities, so the coordination becomes more constrained and hence, more complex. Multi-robot systems have a wide set of applications, from rescue missions to delivery of payloads in a warehouse.

Publications

Training People to Reward Robots

Sun, E., Howard, M. & Zhu, Y., 2025, (Accepted/In press) IEEE/RSJ International Conference on Intelligent Robots and Systems.Research output: Chapter in Book/Report/Conference proceeding › Conference paper › peer-review

Redundant structure based multi-motor servo system fuzzy adaptive fault-tolerant control via unbalanced torque compensation

Wang, B., Yu, J., Xin, H., Cai, M., Lam, H.-K. & Yu, J., 12 Jun 2025, In: IEEE Transactions on Fuzzy Systems.Research output: Contribution to journal › Article › peer-review

Customized Non-Monotonic Prescribed Performance Control for Stochastic MEMS Gyroscopes with Insufficient Input Capability

Xia, Y., Xiao, K., Cao, J., Lam, H.-K., Precup, R.-E., Rutkowski, L. & Agarwal, R. K., 14 May 2025, In: IEEE Transactions on Circuits and Systems I: Regular Papers.Research output: Contribution to journal › Article › peer-review

Further study on stability analysis for Markov linear parameter-varying systems

Wang, L. & Lam, H.-K., 31 May 2025, In: IEEE Transactions on Automatic Control.Research output: Contribution to journal › Article › peer-review

Adaptive Order-Reduction Output-Feedback System for DC-DC Power Converters Using Feedback-Loop Intelligentification

Kim, S.-K., Lim, S., Lam, H.-K. & Ahn, C. K., 6 Jun 2025, In: IEEE TRANSACTIONS ON INDUSTRIAL ELECTRONICS.Research output: Contribution to journal › Article › peer-review

Finite-time fault detection for stochastic nonlinear networked control systems via interval type-2 T-S fuzzy framework

Zeng, Y., Wang, Z., Wu, L. & Lam, H.-K., 24 Feb 2025, In: NONLINEAR DYNAMICS. 113, 13, p. 16493-16510 18 p.Research output: Contribution to journal › Article › peer-review

Adaptive Fuzzy Predefined-Time Cooperative Formation Control for Multiple USVs With Universal Global Performance Constraints

Song, X., Wu, C., Lam, H.-K., Wang, X. & Song, S., 14 Mar 2025, (E-pub ahead of print) In: IEEE TRANSACTIONS ON INTELLIGENT TRANSPORTATION SYSTEMS.Research output: Contribution to journal › Article › peer-review

Average filtering error-based event-triggered fuzzy filter design with adjustable gains for networked control systems

Pan, Y., Huang, F., Li, T. & Lam, H.-K., 28 Feb 2025, (E-pub ahead of print) In: IEEE Transactions on Fuzzy Systems. 33, 6, p. 1963-1976 14 p.Research output: Contribution to journal › Article › peer-review

Learning Probabilistic Logical Control Networks: From Data to Controllability and Observability

Lin, L., Lam, J., Shi, P., Ng, M. K. & Lam, H.-K., 13 Dec 2024, In: IEEE Transactions on Automatic Control.Research output: Contribution to journal › Article › peer-review

Closure to "Discussion: Selective-Compliance-Based Lagrange Model and Multilevel Noncollocated Feedback Control of a Humanoid Robot"

Spyrakos-Papastavridis, E., Dai, J. S., Childs, P. R. N. & Tsagarakis, N. G., Oct 2022, In: Journal of Mechanisms and Robotics . 14, 5, 056001.Research output: Contribution to journal › Article › peer-review

Minimum Friction Coefficient-Based Precision Manipulation Workspace Analysis of the Three-Fingered Metamorphic Hand

Lin, Y. H., Wang, T., Spyrakos-Papastavridis, E., Fu, Z., Xu, S. & Dai, J. S., 1 Oct 2023, In: Journal of Mechanisms and Robotics . 15, 5, 051018.Research output: Contribution to journal › Article › peer-review

Dynamic modeling of wheeled biped robot and controller design for reducing chassis tilt angle

Mao, N., Chen, J., Spyrakos-Papastavridis, E. & Dai, J. S., 1 Aug 2024, In: Robotica. 42, 8, p. 2713-2741 29 p.Research output: Contribution to journal › Article › peer-review

Recursive Estimator-Based Fuzzy Adaptive Control for Discrete-Time Uncertain Systems with State Saturations and Missing Measurements

Shi, W., Liu, J., Lam, H.-K. & Yu, J., Nov 2024, In: IEEE Transactions on Fuzzy Systems.Research output: Contribution to journal › Article › peer-review

Stabilization of Interval Type-2 Polynomial Fuzzy Networked Control Systems under Cyber-Attacks

Xiao, B., Lam, H.-K., Sun, L., Chen, S., Yu, Z., Li, T., Zhu, Z. & Yeatman, E., 16 Sept 2024, (Accepted/In press) In: IEEE Transactions on Industrial Cyber-Physical Systems.Research output: Contribution to journal › Article › peer-review

Parameter-Optimization-Based Adaptive Fault-Tolerant Control for a Quadrotor UAV Using Fuzzy Disturbance Observers

Ren, Y., Sun, Y., Liu, Z. & Lam, H.-K., Oct 2024, (Accepted/In press) In: IEEE Transactions on Fuzzy Systems. Research output: Contribution to journal › Article › peer-review

Hierarchical fuzzy model-agnostic explanation: framework, algorithms and interface for XAI

Yin, F., Lam, H.-K. & Watson, D., 18 Oct 2024, (Accepted/In press) In: IEEE Transactions on Fuzzy Systems. Research output: Contribution to journal › Article › peer-review

Estimation of the Domain of Attraction for Continuous-Time Saturated Positive Polynomial Fuzzy Systems Based on Novel Analysis and Convexification Strategies

Han, M., Huang, Y., Lam, H.-K., Guo, G. & Wang, Z., 16 Oct 2024, In: Fuzzy Sets and Systems. 498, 109155. Research output: Contribution to journal › Article › peer-review

Ventricular Arrhythmia Classification using Similarity Maps and Hierarchical Multi-Stream Deep Learning

Lin, Q., Oglic, D., Curtis, M., Lam, H.-K. & Cvetkovic, Z., 16 Oct 2024, (Accepted/In press) In: IEEE Transactions on Biomedical Engineering. 13 p. Research output: Contribution to journal › Article › peer-review

Relaxed stability and non-weighted L2-gain analysis for asynchronously switched polynomial fuzzy systems

Bao, Z., Lam, H.-K., Li, X. & Liu, F., 4 Aug 2024, (Accepted/In press) In: International Journal of Fuzzy Systems. Research output: Contribution to journal › Article › peer-review

Human Movement Prediction with Wearable Sensors on Loose Clothing

Shen, T., Di Giulio, I. & Howard, M., 9 Sept 2024, IEEE-RAS Int. Conf. on Humanoid Robots (HUMANOIDS) 2024. Research output: Chapter in Book/Report/Conference proceeding › Conference paper › peer-review

Adaptive Fuzzy Finite-Time Singular Perturbation Control for Flexible Joint Manipulators With State Constraints

Rui, Q., Lam, H.-K., Liu, J. & Yu, J., 16 Sept 2024, In: IEEE Transactions on Systems, Man, and Cybernetics: Systems. 54, 12, p. 7521-7527 7 p. Research output: Contribution to journal › Article › peer-review

Finite-Time Asynchronous Switching Control for Fuzzy Markov Jump Systems by Applying Polynomial Membership Functions

Zhao, Y., Wang, L., Xie, X. & Lam, H.-K., 30 Aug 2024, In: IEEE Transactions on Circuits and Systems I: Regular Papers. 71, 12, p. 5607-5617 11 p. Research output: Contribution to journal › Article › peer-review

Short-term load forecasting: cascade intuitionistic fuzzy time series-univariate and bivariate models

Yolcu, O. C., Lam, H.-K. & Yolcu, U., 29 Jul 2024, In: Neural Computing and Applications. 36, 32, p. 20167-20192 26 p. Research output: Contribution to journal › Article › peer-review

Switched command-filtered-based adaptive fuzzy output-feedback funnel control for switched nonlinear MIMO delayed systems

Li, Z., Chen, H., Lam, H.-K., Wu, W. & Zhang, W., 2024, In: IEEE Transactions on Fuzzy Systems. 32, 11, p. 6560-6572 13 p. Research output: Contribution to journal › Article › peer-review

Reinforcement Learning for Fuzzy Structured Adaptive Optimal Control of Discrete-Time Nonlinear Complex Networks

Wu, T., Cao, J., Xiong, L., Park, J.-H. & Lam, H.-K., 2024, In: IEEE Transactions on Fuzzy Systems. 32, 11, p. 6035-6043 9 p. Research output: Contribution to journal › Article › peer-review

Boundary output tracking of nonlinear parabolic differential systems via fuzzy PID control

Zhang, J.-F., Wang, J. W., Lam, H.-K. & Li, H. X., 2024, In: IEEE Transactions on Fuzzy Systems. 32, 12, p. 6863-6877 15 p. Research output: Contribution to journal › Article › peer-review

Command Filter-Based Finite-Time Constraint Control for Flexible Joint Robots Stochastic System with Unknown Dead Zones

Dong, Y., Lam, H.-K., Liu, J. & Yu, J., 17 Jul 2024, In: IEEE Transactions on Fuzzy Systems. 32, 10, p. 5836-5844 9 p. Research output: Contribution to journal › Article › peer-review

A Probabilistic Model of Activity Recognition with Loose Clothing

Shen, T., Di Giulio, I. & Howard, M., 2023, In: Proceedings - IEEE International Conference on Robotics and Automation. p. 12659-12664 6 p. Research output: Contribution to journal › Article › peer-review

Fast Finite-Time Formation Control of UAVs With Multiple Loops and External Disturbances

Huang, W., Guo, Y., Ran, G., Lam, H.-K., Liu, J. & Wang, B., 1 Jul 2024, In: IEEE Transactions on Intelligent Vehicles. Research output: Contribution to journal › Article › peer-review

Q-Learning & Economic NL-MPC for Continuous Biomass Fermentation

Vinestock, T., Lam, H.-K., Taylor, M. & Guo, M., 26 Jun 2024, Computer Aided Chemical Engineering. Vol. 53. p. 1807-1812 6 p. (Computer Aided Chemical Engineering; vol. 53). Research output: Chapter in Book/Report/Conference proceeding › Chapter › peer-review

Event-Triggered Disturbance Rejection Control for Brain-Actuated Mobile Robot: An SSA-Optimized Sliding Mode Approach

Zhu, Z., Song, J., He, S., Lam, H.-K. & Liu, J. J. R., 10 May 2024, (Accepted/In press) In: IEEE/ASME Transactions on Mechatronics. p. 1-12 12 p. Research output: Contribution to journal › Article › peer-review

Stability analysis of interval type-2 sampled-data polynomial fuzzy-model-based control system with a switching control scheme

Chen, M., Lam, H.-K., Xiao, B., Zhou, H. & Xuan, C., 10 May 2024, In: Nonlinear Dynamics. 112, 13, p. 11111-11126 16 p. Research output: Contribution to journal › Article › peer-review

A Hybrid GCN-LSTM Model for Ventricular Arrhythmia Classification Based on ECG Pattern Similarity

Lin, Q., Oglic, D., Lam, H.-K., Curtis, M. & Cvetkovic, Z., 15 Apr 2024, (Accepted/In press) 46th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBC 2024: IEEE. 4 p. Research output: Chapter in Book/Report/Conference proceeding › Conference paper › peer-review

Stiffness evaluation of a novel ankle rehabilitation exoskeleton with a type-variable constraint

Wang, T., Lin, Y. H., Spyrakos-Papastavridis, E., Xie, S. Q. & Dai, J. S., Jan 2023, In: Mechanism and Machine Theory. 179, 105071. Research output: Contribution to journal › Article › peer-review

Fuzzy Observer-based Command Filtered Adaptive Control of Flexible Joint Robots with Time-varying Output Constraints

Su, J., Lam, H.-K., Liu, J. & Yu, J., 11 Mar 2024, In: IEEE Transactions on Circuits and Systems II: Express Briefs. 71, 9, p. 4251-4255 5 p. Research output: Contribution to journal › Article › peer-review

Anti-Attack Protocol-Based Synchronization Control for Fuzzy Complex Dynamic Networks

Wu, T., Ahn, C. K., Cao, J., Xiong, L., Liu, Y. & Lam, H.-K., 25 Feb 2024, In: IEEE Transactions on Fuzzy Systems. 32, 6, p. 3433-3443 11 p. Research output: Contribution to journal › Article › peer-review

Fuzzy Neural Network-Based Adaptive Sliding-Mode Descriptor Observer

Zhong, Z., Lam, H.-K., Basin, M. V. & Zeng, X., 1 Jun 2024, In: IEEE Transactions on Fuzzy Systems. 32, 6, p. 3342-3354 13 p. Research output: Contribution to journal › Article › peer-review

Observer-based fuzzy tracking control for an unmanned aerial vehicle with communication constraints

Kong, L., Liu, Z., Zhao, Z. & Lam, H.-K., 1 Jun 2024, In: IEEE Transactions on Fuzzy Systems. 32, 6, p. 3368-3380 13 p. Research output: Contribution to journal › Article › peer-review

Synchronous MDADT-Based Fuzzy Adaptive Tracking Control for Switched Multiagent Systems via Modified Self-Triggered Mechanism

Liang, H., Wang, W., Pan, Y., Lam, H.-K. & Sun, J., 9 Feb 2024, In: IEEE Transactions on Fuzzy Systems. 32, 5, p. 2876-2889 14 p. Research output: Contribution to journal › Article › peer-review

New stability criterion for positive impulsive fuzzy systems by applying polynomial impulse-time-dependent method

Wang, L., Zheng, B. & Lam, H.-K., 10 Feb 2024, In: IEEE Transactions on Cybernetics. 54, 9, p. 5473-5482 10 p. Research output: Contribution to journal › Article › peer-review

Integrated Fault-Tolerant Control Design With Sampled-Output Measurements for Interval Type-2 Takagi-Sugeno Fuzzy Systems

Zhou, H., Lam, H.-K., Xiao, B. & Xuan, C., 2024, In: IEEE Transactions on Cybernetics. 54, 9, p. 5068-5077 10 p. Research output: Contribution to journal › Article › peer-review

Polynomial Fuzzy Observer-Based Feedback Control for Nonlinear Hyperbolic PDEs Systems

Tsai, S.-H., Lee, W.-H., Tanaka, K., Chen, Y.-J. & Lam, H.-K., 7 Feb 2024, In: IEEE Transactions on Cybernetics. 54, 9, p. 5257-5269 13 p. Research output: Contribution to journal › Article › peer-review

Double Asynchronous Switching Control for Takagi–Sugeno Fuzzy Markov Jump Systems via Adaptive Event-Triggered Mechanism

Zhao, Y., Wang, L., Xie, X., Hou, J. & Lam, H.-K., 1 Feb 2024, In: IEEE Transactions on Systems, Man, and Cybernetics: Systems. 54, 5, p. 2978-2989 12 p. Research output: Contribution to journal › Article › peer-review

Spatiotemporal Fuzzy-Observer-based Feedback Control for Networked Parabolic PDE Systems

Wang, J. W., Feng, Y., Dubljevic, S. & Lam, H.-K., 1 May 2024, In: IEEE Transactions on Fuzzy Systems. 32, 5, p. 2625-2638 14 p. Research output: Contribution to journal › Article › peer-review

Asynchronous switching control for fuzzy Markov jump systems with periodically varying delay and its application to electronic circuits

Zhao, Y., Wang, L., Xie, X., Lam, H.-K. & Gu, J., 16 Jan 2024, In: IEEE Transactions on Automation Science and Engineering. p. 1-12 12 p. Research output: Contribution to journal › Article › peer-review

An Obstacle Avoidance-Specific Reinforcement Learning Method Based on Fuzzy Attention Mechanism and Heterogeneous Graph Neural Networks

Zhang, F., Xuan, C. & Lam, H.-K., 19 Dec 2023, (Accepted/In press) In: Engineering Applications of Artificial Intelligence. Research output: Contribution to journal › Article › peer-review

Fuzzy SMC for discrete nonlinear singularly perturbed models with semi-Markovian switching parameters

Qi, W., Cheng, J., Park, J.-H. & Lam, H.-K., 20 Nov 2023, (Accepted/In press) In: IEEE Transactions on Fuzzy Systems. Research output: Contribution to journal › Article › peer-review

LMIs-based exponential stabilization for interval delay systems via congruence transformation: Application in chaotic Lorenz system

Zheng, W., Zhang, Z., Lam, H.-K., Sun, F. & Wen, S., Nov 2023, In: Chaos, Solitons and Fractals. 176, 114060. Research output: Contribution to journal › Article › peer-review

Stability analysis and L2-gain control for positive fuzzy systems by applying a membership-function-dependent Lyapunov function

Zheng, B., Wang, L., Xie, X. & Lam, H.-K., 1 Nov 2023, In: Nonlinear Dynamics. 111, 24, p. 22255-22265 11 p. Research output: Contribution to journal › Article › peer-review

Stability and Stabilization of Fuzzy Event-Triggered Control for Positive Nonlinear Systems

Wang, Z., Meng, A., Lam, H.-K., Xiao, B. & Li, Z., 1 Nov 2023, In: International Journal of Fuzzy Systems. Research output: Contribution to journal › Article › peer-review

News

King's Culture announces Creative Practice Catalyst Seed Fund recipients

King's Culture announce the recipients of a new creative practice seed fund, enabling researchers and academics to consolidate relationships with partners in...

“Interactive, fascinating, new experiences”: King's inspires future engineers at Success for Black Students outreach days

Academics, students and technicians came together to bring engineering to life for 64 London high schoolers at two Success for Black Students events.

King's welcomes The Princess Royal for official opening of the Quad

HRH The Princess Royal, in her role as Chancellor of the University of London, officially opened the Quadrangle (Quad) building at King’s College London.

Former Paralympian builds brighter future for prosthetic limb users with King's

Britain’s former youngest Paralympian tested prototype prosthetic arms to create better designs.

COMMENT: Trump may cancel Nasa's powerful SLS Moon rocket – here's what that would mean for Elon Musk and the future of space travel

Professor Yang Gao explores the implications of cancelling the SLS Moon rocket.

Group lead

Contact us

If you have any questions or would like to inquire about working with us, please get in touch.